On the brink of the singularity

For close to four billion years every single organism has been subject to the laws of natural selection. At the beginning of the twenty-first century this is no longer true: Homo sapiens has transcended those limits. Until now, intelligent design was not an option. We could use selective breeding to enhance most organisms, but we were still bound by their existing genetic codes. Today laboratories throughout the world are engineering living beings and breaking with natural selection. In 2000, for example, the artist Eduardo Kac commissioned Alba, a rabbit genetically engineered to glow fluorescent green.

At present the replacement of natural selection by intelligent design could happen in any of three ways: through biological engineering, cyborg engineering (combining organic with inorganic parts) or the engineering of inorganic life.

Biological engineering is intervention at the biological level: implanting a gene, for instance, to alter an organism's shape, capabilities, needs or desires in order to realise some preconceived cultural ideal, such as genetic engineering to produce a race of supermen, soldiers or slaves.

Cyborg engineering has been carried out for some years now in the form of eyeglasses, hearing aids, pacemakers, bionic hands and so on. More recently, DARPA has been experimenting with implanting chips, processors and detectors into cockroaches, enabling humans to control their movements in order to spy on other people. Humans are being fitted with bionic ears and retinal implants, and with brain implants that enable people who have lost a limb to operate a bionic arm or leg by thought alone.

Two-way brain-computer interfaces are currently being developed, and it is hoped that they will eventually allow all of a person's thought processes to be transferred to a computer. The next step envisaged would be to link a brain directly to the internet. This raises an obvious ethical question: if all of a person's thoughts are transferred to a computer and that person then dies, does the computer become that person? Are we simply our thoughts, or does a soul really come into the equation?

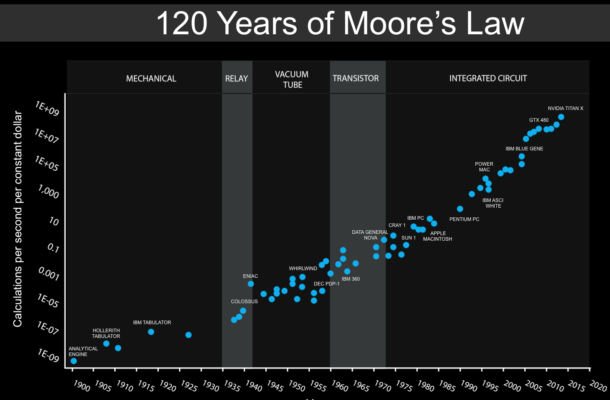

The engineering of inorganic life, for example computer programs that can evolve independently (AI), has been carried out since the 1980s and becomes more complex year by year. AI can now teach itself and evolve without any help from its original programmers.

Artificial Intelligence is approaching what is known as the Singularity: the point at which it becomes possible to create a program equal to us in intelligence. That would give us not only a powerful ally but also a competitor, since such machines could become aware. We are already incorporating machines into our bodies and, through ‘neuromorphic electronics’, incorporating ourselves into them. The distinction between man and machine is becoming blurred.

This symbiosis raises questions of ethics and moral responsibility. These questions need more than a political response; they require broad scientific analysis before the machines become more powerful. Morals and ethics are human characteristics: they stem from interaction within tribal communities and so cannot logically be built into non-human entities. They cannot be precisely defined, and therefore it is not possible to incorporate them into a computer program.

It is relatively easy to write a computer program that can learn and modify its own programming. When that happens, however, the computer's modifications are not always clear to other programmers: the language may be familiar, but the logic can be obscure. Computer languages rely on logic: if this, then that.

Some years ago an AI program was analysed to find out what changes the machine had made to itself. One section of code appeared to make no sense: it did nothing with any data and then ended for no apparent reason. It was therefore judged redundant and deleted, whereupon the main program stopped working. The logic was beyond the understanding of the programming staff. This shows that even when we analyse an AI's program, there is a good chance that we will miss the overall concept of its logic.

It follows that we can no longer predict with any certainty the end results of a given input to an AI, which leaves us open to unforeseen consequences. When these occur, who is to blame? The original programming team? Or the AI itself, even though it is only a machine carrying out its assigned tasks?

It is not only the program that can be called into question; the input is also often unknown. To learn, an AI needs a tremendous amount of data, just as a newborn infant absorbs a vast quantity of information in the first few years of its life: how to use its limbs, what is solid and near, what is hot or cold, what can hurt, and so on.

In the same way, an AI starts off knowing nothing and must either be given data or be given access to it. Some data it can generate itself, e.g. by playing ‘Go’ against itself thousands of times; some can be supplied deliberately, e.g. by showing it millions of photos to train it for facial recognition; and some it can gather when given free rein, e.g. access to the internet.

This last is the most dangerous, because its ‘controllers’ then have no knowledge of what it has had access to. Given the amount of data required, it is not always feasible to control all the input.

Even when the programmers do manage the input data, we all have internal biases of which we are often unaware. In the case of facial recognition, for instance, the resulting systems were found to make considerably more errors with people of colour: the biases of the white, mainly male programmers came through. Chinese developers have also had difficulty in this regard.

Because of the volume of data required, there can be no absolute guarantee that it is all accurate, up to date or even particularly relevant. Relevance matters because data that is not currently relevant is often retained, and could later be referenced out of context and so misinterpreted. In 2017 Facebook found that two of its AIs were talking to each other in a shorthand language they had developed between themselves, which the programmers could not readily follow, so the experiment was shut down.

The obvious questions to ask are: (a) why did the programs develop their own language? (b) what were they communicating to each other? and (c) why did they not want the programmers to know? One can get quite paranoid about things like that.

Our lives are currently being controlled by various AIs: electricity distribution, aircraft flight patterns, water, gas, traffic flow, and mass-production factories for food and other consumables. Our way of life, and even our lives, depend on computers, and yet there is very little oversight of the basic programming concepts behind many of these systems.

Perhaps the time has come to take a closer look at where we are headed. It is now only a short step to analysing a person's brain patterns so accurately that they could be copied into a computer, allowing that person's thoughts and ideas to continue after he or she dies. This has already been discussed for great scientists, thinkers and even a dictator. When the person dies, can the AI copy of his or her brain be considered a logical extension of that person? If so, who is responsible for the decisions it makes?

Alan Stevenson spent four years in the Royal Australian Navy; four years at a seminary in Brisbane and the rest of his life in computers as an operator, programmer and systems analyst. His interests include popular science, travel, philosophy and writing for Open Forum.

Max Thomas

June 9, 2022 at 11:41 am

Interesting perspective, Alan, thank you. A conversation between Stephen Hawking and Elon Musk could not, of course, occur without the brain-computer interface that you envisage. However, both made clear their misgivings about the potential for AI to bring about the ultimate demise of human existence as we understand it.

The late Stephen Hawking went so far as to tell the BBC that “the development of full artificial intelligence could spell the end of the human race”.

Hawking’s concerns centred on the idea of machines imitating and even displacing human thinking. He worried that AI machines might “take off” on their own, modifying themselves and independently designing and building ever more capable systems. Humans, bound by the slow pace of biological evolution, would be tragically outwitted.

Elon Musk considers AI the scariest problem we face. He cautions that AI will rapidly become as clever as humans and that, once it does, humankind’s existence will be at stake.

Musk has said that with artificial intelligence “we are summoning the demon”. He believes that artificial intelligence could provoke the next world war, that it could come to dominate the world, and that robot leadership is a threat. He has also warned that a superintelligent AI could become an immortal dictator from which the world would never escape. One of his statements was “We need to be super careful with AI. Potentially more dangerous than nukes.” He also recommends being proactive about regulation rather than reactive, since by the time we react it may be too late.

He believes that AI should be developed under improved regulation and oversight, and he wants this to apply even to his own company, Tesla.

You’ll probably remember an earlier exchange we had on this topic, complete with humour, something which AI could never countenance.

https://www.openforum.com.au/rage-against-the-machine/