The art of avoiding technological traps

Over the past 200 years, the world has become demonstrably more connected. Rising prosperity and innovation have aligned with cycles of industrial and technological development. But along with the obvious benefits of such innovation have come a range of unexpected and often calamitous emergency situations that remind us that certainty is often a scarce commodity.

Strongly linked to certainty are the dual notions of safety and danger. As society pursues innovation and the benefits of technological development, it must also deal with an increased likelihood of unanticipated disruptions in both human and technological systems that are, by design and use, becoming more detailed and complex.

The role of government in preventing such disruptions is critical because the modern state, as the functional provider of public administration and legislative oversight, derives validity from the promise that it is an effective (and preferred) means of providing safety (and certainty) to the populace.

This role is made more important by the reality that in representative democracies (and those not so representative), many decisions with the potential to affect populations are made by government officials or regulators. Such power differentials can undermine people’s trust in government because citizens are often so far removed from the decision-making process.

It’s also reasonable to expect that innovation (through the application of new technology to problems or the use of old technology in new ways) should hold few surprises for beneficiaries. An extension of the notion that the government ‘makes us safe’ is the expectation that it also licenses and regulates the use of much technology and, by doing so, provides a degree of certainty about the technology’s reliability and safety.

It’s in this context that technology assessment and foresight are critical for Australia. This especially holds true in relation to reducing the likelihood that incidents or issues involving the unexpected impact of technology and related systems develop into significant disruptions.

A notable example of such a combination of technology assessment and futures thinking was embodied in the US Congressional Office of Technology Assessment. The OTA was bipartisan and was established to examine issues involving new or expanding technologies, assess their impacts (positive and negative), and then analyse options to avert crises from their misuse or unintended consequences from their use.

The OTA was defunded in 1995 after 23 years of service—some suggest as collateral damage from congressional power politics in play at the time.

Over its life, the OTA produced more than 700 studies on topics relevant to governance and decision-making, ranging across a broad science and technology terrain. Its demise still resonates among US policy and governance professionals, and there have been recent calls to resurrect it.

Technology assessment agencies similar to the OTA (possibly influenced by it) operate in Europe. Notable examples exist in the European Parliament, the German Bundestag, Denmark and Norway.

While Australia is active in technical assessment, particularly in the areas of health and workplace safety, technology assessment of the scope carried out by the OTA and other international bodies is generally absent.

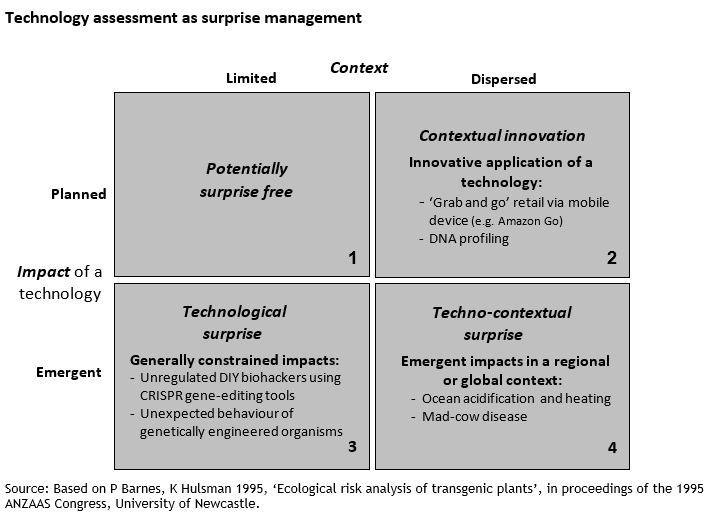

What nuanced information could Australia get from well-tuned technology assessment? The accompanying matrix suggests a type of analytical view that should be possible. The framework contrasts the impact of a technology (planned or emergent) against the context (limited or dispersed) of possible impacts from a societal regulatory and safety perspective.

A suitable goal of effective technology management and foresight in support of both innovation and regulatory safety would be to minimise the likelihood of generating outcomes such as those shown in quadrants 3 and 4, while promoting policies and practices that seek to support achieving the relatively benign states of play in quadrants 1 and 2.

The emergence of surprise impacts like those in quadrants 3 and 4 may be difficult to predict because of combinations of unnoticed trends, unanticipated synergism between components of complex systems, or abrupt shifts in previously stable systems or contexts.

A further factor is that the effects of techno-contextual surprises, in addition to having wide geographic footprints, may continue for long periods, potentially spanning generations.

Emergent disruptive phenomena such as climate variability, disease outbreaks, or the increasing complexity of embedded information and communications technology, together with emergent interdependencies within and across infrastructure systems, create significant problems of governance for both the private and public sectors.

Some technological surprises might be preventable by design, although foresight or horizon scanning is likely to be needed for instances where human ingenuity is in play or product functionality derives from the convergence of more than one technology component.

Disruptions in complex contexts are likely to generate cascading impacts that manifest in unexpected ways. Institutions are also less likely to face single incidents than systemic failures, often appearing concurrently as ‘network’ effects.

What can the Australian government do? It might consider establishing its own office of technology assessment to support the provision of timely targeted advice to elected officials and to those within relevant public- and private-sector institutions.

Such an office would assist in making sense of the potential for counterintuitive disruptions that emerge from the interplay between modern life and the technologies we all use, as well as the innovations that are always appearing on the horizon. Such anticipatory thinking should also be part of national risk assessment capabilities.

This article was published by The Strategist.

Dr Paul Barnes is a Research Fellow specialising in urbanisation and disaster resilience in the Institute of Global Development at the University of New South Wales. He previously established the Risk & Resilience Program at the Australian Strategic Policy Institute.