Facing up to facial recognition

But as governments move to leverage technology to fight COVID-19, we should be mindful that this scramble could open the door to technologies that will impact society in ways that are more profound and far-reaching than the pandemic itself.

Consider the use of facial recognition technologies.

These are systems that scan images and videos for people’s faces, and either attempt to classify them or make assessments of their character.

Some of the world’s leading technology and surveillance companies have recently released new facial recognition tools that, they claim, can identify people with a high degree of accuracy even when they are wearing masks.

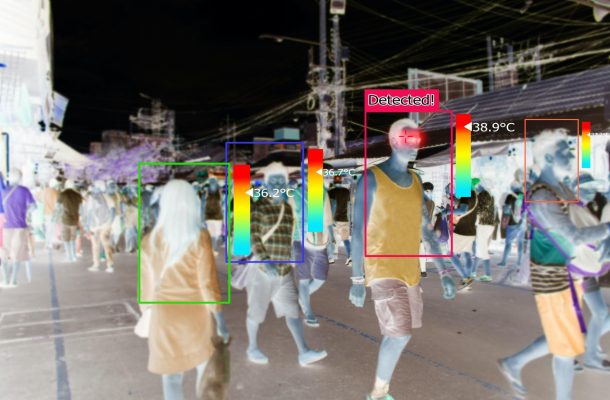

Similarly, thermal imaging equipment is being proposed to identify potential COVID-19 carriers by tracking the temperature of their face. In fact, London Heathrow Airport has just announced it will use the technology to carry out large-scale passenger temperature checks.

This capability is increasingly being retrofitted into ubiquitous CCTV systems. China, Russia, India and South Korea are notorious leaders in this space.

Some authorities are now able to identify patients with an elevated temperature, revisit their location history through automated analyses of CCTV footage, and automatically identify (and notify) those who may have been exposed to the pathogen.

And, yes, some of these are positive moves in an effort to rid societies of COVID-19. It is hard to argue that this is not, in itself, a noble cause.

Indeed, the enormous uptake of contact tracing applications around the world is a sign that many of us seem willing to surrender some of our privacy in order to curb the spread.

But the unanswered legal, constitutional and democratic questions that these apps raise are a real concern.

So, let’s pause for a minute, because now is the right time to question these technologies, review some historical precedents and think about what the ‘new normal’ may be when the world attempts to return to its former state.

False positives and discrimination

Most of these new facial recognition tools ignore the significant risk of discrimination and persecution, the possibility of false positives or negatives, and the general inaccuracy of the technology due to insufficient testing.

Picture the consequences of an automated system falsely identifying you as someone with an elevated body temperature, just as you return from a weekly grocery run.

These false positives are not unlikely. Infrared imaging equipment may be broken or used incorrectly, readings can be misinterpreted by a human reviewer or – even worse – by an automated process over which you have no control.

When facial recognition is involved, false positives are even more likely as datasets may have been too small to begin with or haphazardly developed.
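To see why false positives matter at scale, consider a minimal sketch of the underlying arithmetic. It applies Bayes' rule to a screening system and asks what share of flagged people actually have the condition. Every number below is a hypothetical assumption chosen for illustration, not a measurement of any real thermal camera or facial recognition product, and the function name is ours.

```python
def positive_predictive_value(prevalence, sensitivity, specificity):
    """Share of flagged people who truly have the condition (Bayes' rule).

    prevalence:  fraction of screened people who have the condition
    sensitivity: fraction of true cases the system flags
    specificity: fraction of non-cases the system correctly leaves alone
    """
    true_positives = prevalence * sensitivity
    false_positives = (1.0 - prevalence) * (1.0 - specificity)
    flagged = true_positives + false_positives
    if flagged == 0:
        return 0.0  # nobody is flagged, so there is nothing to evaluate
    return true_positives / flagged


# Hypothetical scenario: 1 in 100 passers-by has a fever, the camera
# catches 90% of them and wrongly flags 5% of everyone else.
ppv = positive_predictive_value(prevalence=0.01, sensitivity=0.90, specificity=0.95)
print(f"{ppv:.1%} of flagged people actually have a fever")
```

Under these assumed figures, roughly 15 per cent of the people flagged would actually have a fever, and the rest would be false positives. When the condition being screened for is rare, even a system that sounds accurate can flag mostly the wrong people.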

In most democratic nations, these occurrences may ‘only’ lead to a benign outcome like compulsory self-quarantine, but other governments may decide to publicly shame those who have violated rules or limit their freedom to move.

We’ve been here before

The application of facial recognition systems to fight COVID-19 adds another blot to the CV of this technology.

Recent examples that have been widely criticised include systems that use facial images to distinguish sexual preferences, identify a person’s ethnic group and spot academics in a crowd.

Studies emphasise that the practice of classifying humans based on visible differences has a troubled past with roots in eugenics – a movement or philosophy holding that practices such as selective breeding can improve the genetic composition of the human race.

It’s a belief that has resulted in numerous examples of oppression in the past.

The practice of ‘reading’ human portraits began under Swiss minister Johann Kaspar Lavater in the late 1700s and English scientist Sir Francis Galton in the mid-1800s. Championing eugenics, Galton put in motion a global movement to quantify facial characteristics as indicators of a person’s psychology.

But the early 1900s saw a new, worrying twist.

During an already unsettled time in Europe, Italian criminologist Cesare Lombroso proposed pre-screening and isolation of people with “disciplinary shortcomings” to be enshrined in law. Physiognomy, the practice of presuming mental character from facial appearance, was now mainstream.

Only a few decades later physiognomic thought proved influential in the rise of Nazi Germany.

These ideas from the past have proven to be remarkably tenacious.

They reappeared in a now-withdrawn 2016 article on using artificial intelligence (AI) to distinguish criminal faces from noncriminal faces. As recently as 2020, an article inspired by Lombroso’s work claimed that it was possible to determine criminal tendency by analysing the shape of a person’s face, eyebrows, top of the eye, pupils, nostrils, and lips in a dataset of 5,000 greyscale ‘poker faces’.

And suddenly, it seems we’re back in 18th-century Europe.

Make facial recognition great again?

More than ever, the COVID-19 pandemic illustrates that we’re at a crossroads.

Technologies are being developed and used to fight an invisible enemy and counteract the spread of a terrible disease, but we’re also at risk of irreversibly releasing oppressive systems onto society that are not in the best interest of public life.

The team at the Interaction Design Lab developed Biometric Mirror, a speculative and deliberately controversial facial recognition technology designed to get people thinking about the ethics of new technologies and AI.

Thousands of people tried our application, from schools to the World Economic Forum and World Bank, as well as the Science Gallery Melbourne and NGV.

What we found is that there is no widespread public understanding of the way facial recognition technology works, what its limitations are and how it can be misused.

This is worrying.

The Australian Government’s 2018 commitment to support the delivery of Artificial Intelligence programs in schools is a welcome response. As young people face growing needs to be technologically literate, school programs must respond to emerging innovations, trends and needs.

School programs must also empower young people to think critically about innovations, how they work and how they are compromised.

But questions remain over whether budgets will allow for future growth of these programs.

When the COVID-19 pandemic ends, we must be mindful of the type of future society we want to live in. Do we want our old one back, or do we want a new one where widespread surveillance is the norm in the name of public health?

This article was written by Dr Niels Wouters and Dr Ryan Kelly of the University of Melbourne and published by Pursuit.

Dr Niels Wouters is Head of Research and Emerging Practice for Science Gallery Melbourne, and Research Fellow in the Interaction Design Lab at the University of Melbourne. In his role, he promotes collisions between arts and science as a mechanism to expose the breadth of STEM.