AI apocalypse or overblown hype?

The tidal wave of predictions that generative artificial intelligence (AI) created last year shows no signs of abating.

Social media, traditional media and water cooler conversations are awash with predictions about AI’s implications, including the risks and dangers of so-called large language models like ChatGPT.


Soothsayers are having a field day with headlines like “OpenAI’s ChatGPT will change the world”; “First your job, and then your life!”; and “30 ways for your business to survive the AI revolution”.

Some countries are taking action over generative AI. Italy temporarily banned ChatGPT over privacy concerns, and the chatbot is blocked in China, Iran and Syria.

AI luminaries are also expressing concern. AI pioneer Geoffrey Hinton resigned from Google so that he could speak freely about the technology’s dangers.

“I don’t think they should scale this up more until they have understood whether they can control it,” Hinton said.

Hinton’s remarks followed an open letter, signed by Elon Musk, Yoshua Bengio (who, like Hinton, is often called a ‘Godfather of AI’) and others, that called for a pause on the development of AI models more advanced than GPT-4.

More recently, Samuel Altman from OpenAI appealed to US lawmakers for greater regulation to prevent AI’s possible harms.

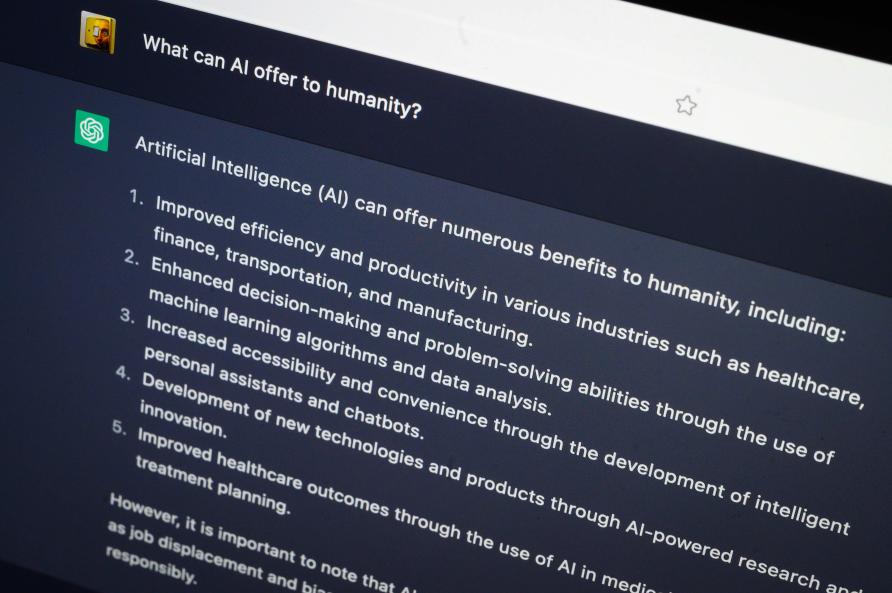

Of course, many individuals and Big Tech companies contend that AI can be a force for good. For example, AI might provide creative assistance in writing and image generation. Professionals in many sectors are already integrating tools like Google’s Bard into their work.

Samuel Altman, CEO of OpenAI, before the Senate Judiciary Subcommittee on Privacy, Technology, and the Law.

All the hubbub raises the question: are we truly at an AI turning point? Is it the beginning of the end for humanity or a passing fad? Should we be worried about emerging AI, and if so, why?

Whichever way you slice the silicon, things are changing fast. We’re unlikely to put the genie back in the bottle, so we need to understand and, where possible, mitigate the risks.

There are two camps of thinkers with different takes on AI’s immediate and longer-term risks – the ‘AI Alignment’ (safety first) and ‘AI Ethics’ (social justice) camps.

AI Alignment – death, self-interest and superintelligence

Some people – like Musk, Hinton and Altman – fear that AI could be turned into a weapon of mass destruction by autocrats or nations.

They also worry about AI’s own agency. However carefully AI is programmed, they fear we may inadvertently design algorithms (‘Misaligned AI’) that relentlessly pursue goals contrary to our interests.

Some of AI’s harms will be relatively localised – though still significant – as when AI replaces human workers or even eliminates whole industries.

But their largest concern is that AI could get smart enough to threaten humanity itself.

According to these and other AI experts, “mitigating the risk of extinction from AI should be a global priority alongside other societal-scale risks such as pandemics and nuclear war.”

Hinton likened emerging AI to having “10,000 people and whenever one person learnt something, everybody automatically knew it. And that’s how these chatbots can know so much more than any one person.”

Thinkers like Swedish philosopher Professor Nick Bostrom, director of the Future of Humanity Institute at Oxford University, believe that in the not-too-distant future, AI may not only match human intelligence but far outstrip it, leading to a dangerous superintelligence.

But other experts regard the risks of an AI apocalypse as “overblown.”

AI’s history reveals sharp jumps in technological capabilities that seemed to herald unlimited growth. But these jumps have typically been followed by long ‘AI winters’ in which progress slowed considerably.

However, some suspect that this time will be different.

AI Ethics – prejudice and misinformation

People in the ‘AI Ethics’ camp focus on the social justice implications of AI. Algorithms with subtle biases are already seeping into everyday life.


These algorithms absorb and transmit prejudices from the data used to train them.

Researchers like Emily Bender and Timnit Gebru (who was forced out of Google after raising ethical concerns about AI bias) have spoken out about how AI can propagate misinformation, deepfakes and prejudice.

For example, asking generative AI for the pronoun of a doctor in a short story is likely to return ‘he’ rather than ‘she’ or ‘they’, mirroring prejudices in society. This and similar biases show up in many algorithms, such as those that help determine who gets bail and those that generate images from text descriptions.

Some AI Ethics proponents suggest that most people in the AI Alignment camp represent a narrow slice of humanity. Many, they say, are wealthy white men with something to gain from playing up the existential risks while downplaying AI’s effects on minoritised groups.

Some commentators go so far as to say we should stop discussing the alleged risks of extremely intelligent AI. They argue there are more urgent matters to attend to, including how AI might harm non-human animals and the environment.

We need to consider a range of risks

Both camps agree we have reached a turning point in AI’s ability to affect the world, for better and for worse.


Despite the heat in these disputes, both camps raise issues worth pondering.

Perhaps we should learn from each side. For example, we could address the immediate social and environmental impacts of AI while continuing to think about the safety issues that more advanced AI might create in future.

Clearly, public interest in AI is escalating.

Politicians and governments too are turning their attention to AI. Since AI promises both significant benefits and dangers, this interest is welcome.

Whichever camp’s concerns speak to you most, there is a clear and pressing need for research that considers how to evolve AI ethically.

This article was written by Simon Coghlan and Shaanan Cohney of the University of Melbourne and published by Pursuit.

Simon Coghlan is Senior Research Fellow in Digital Ethics in the School of Computing and Information Systems at the University of Melbourne. His research interests include the ethics of robots, AI, animals, and the environment.